ReZero: Region-customizable Sound Extraction

Rongzhi Gu, Yi Luo

Abstract:

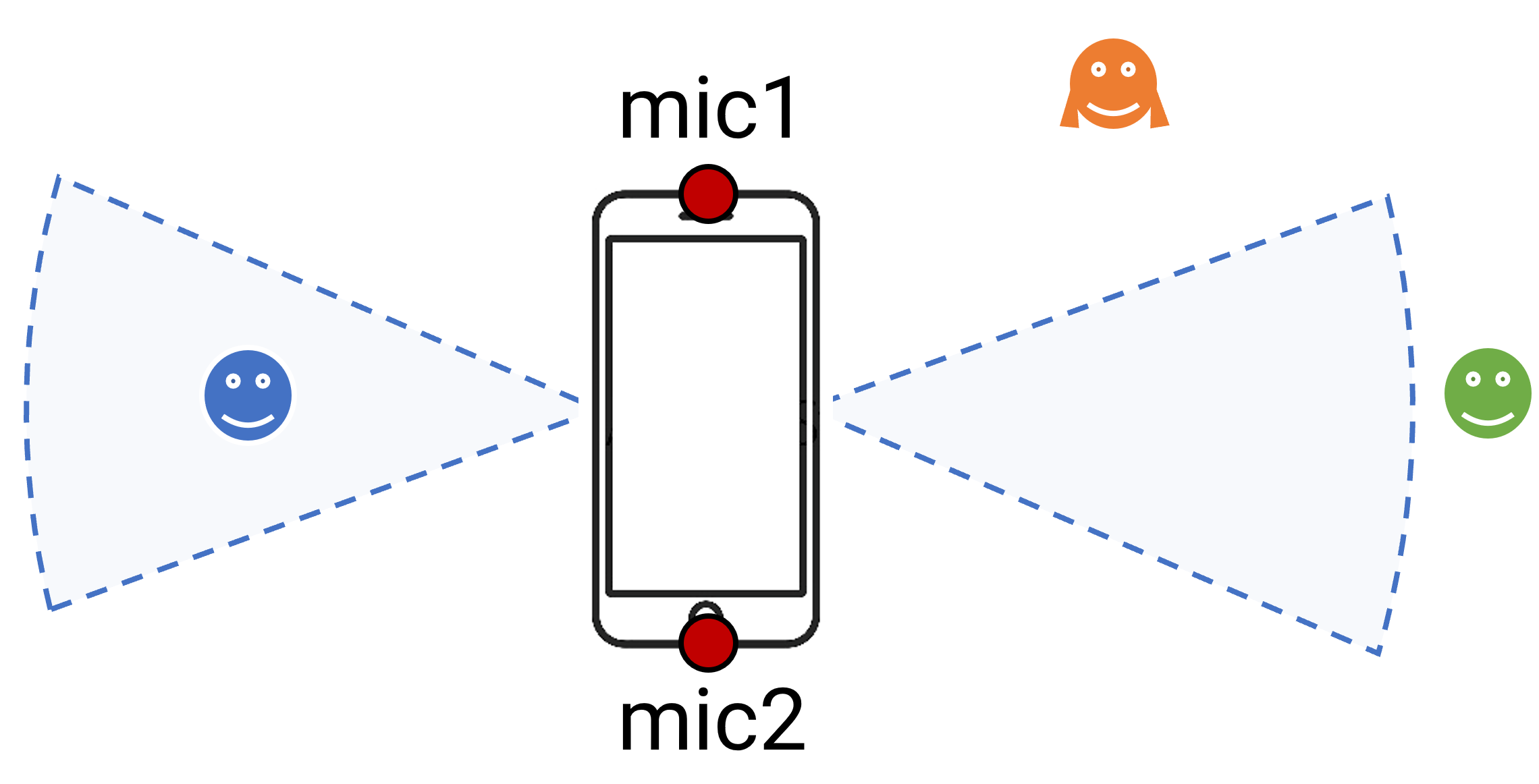

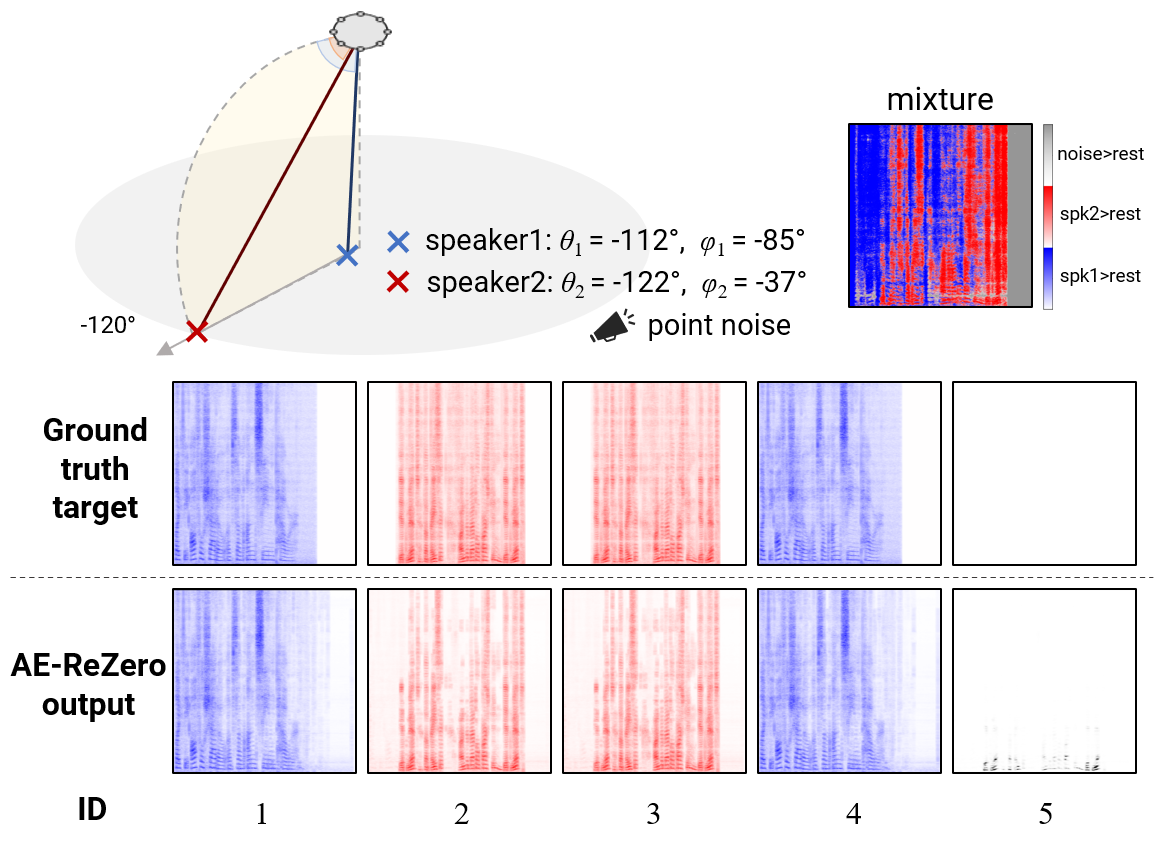

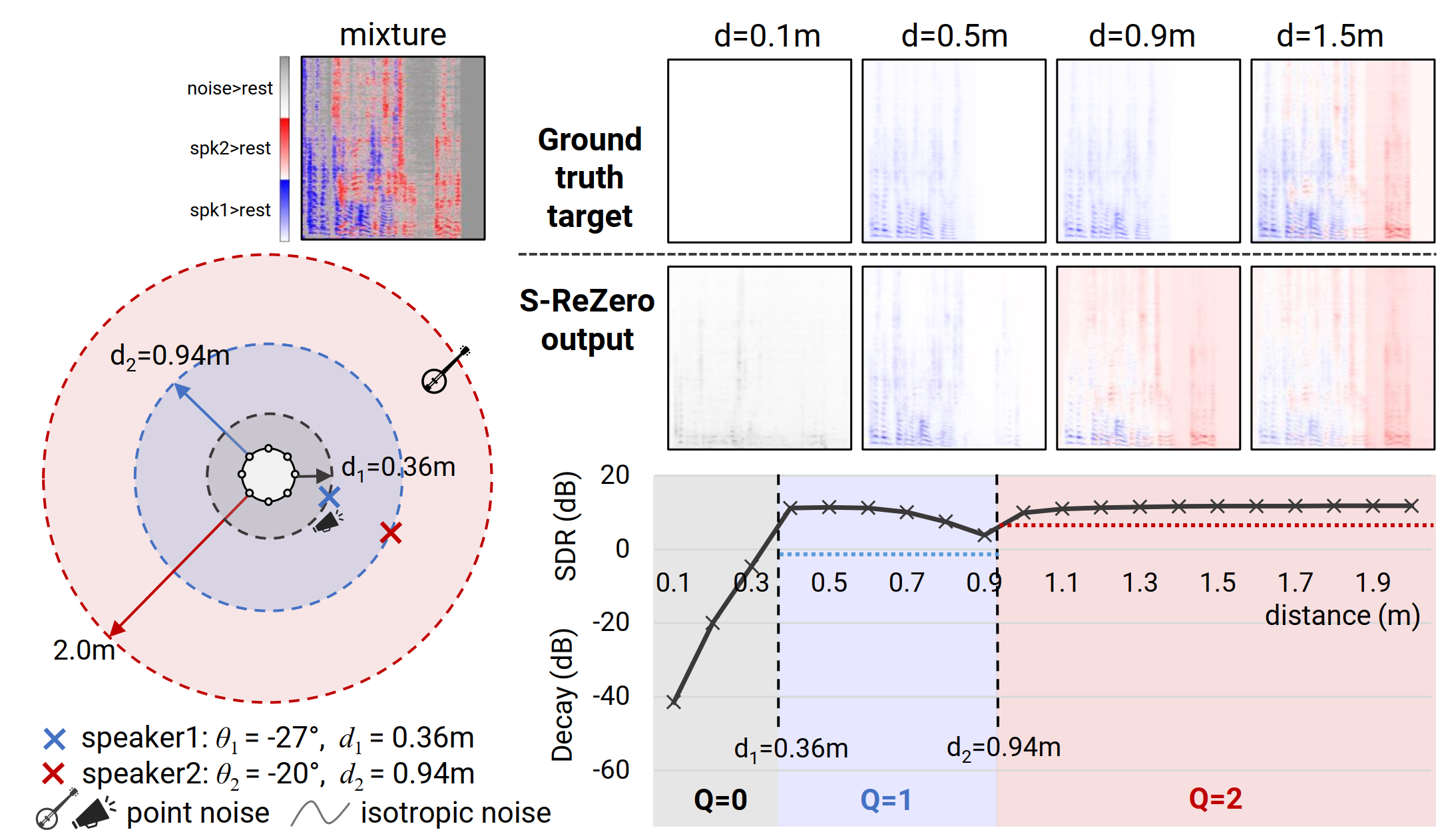

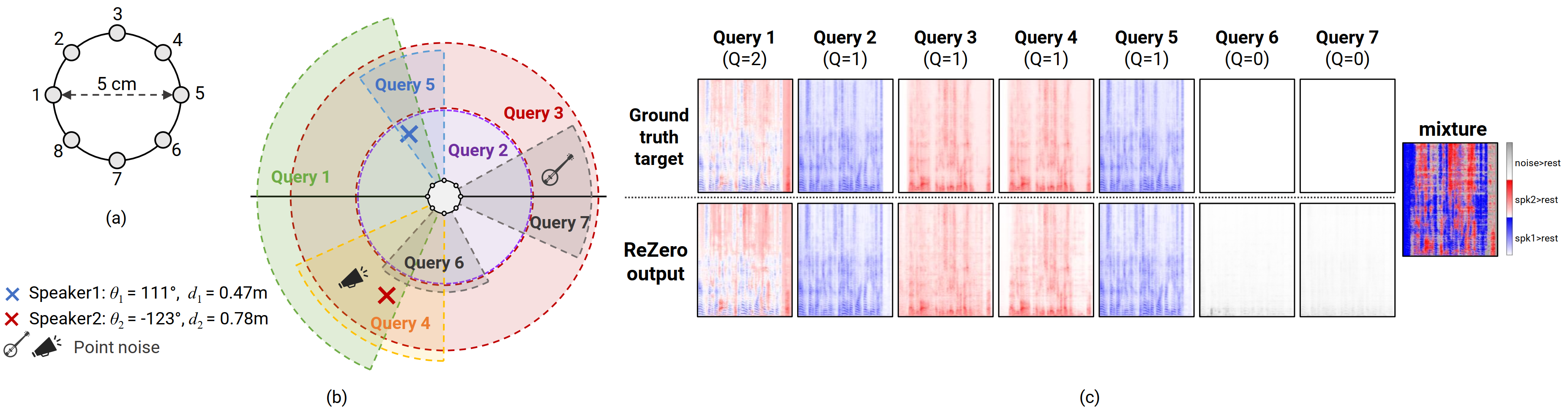

We introduce region-customizable sound extraction (ReZero),

a general and flexible framework for the multi-channel region-wise sound extraction (R-SE) task.

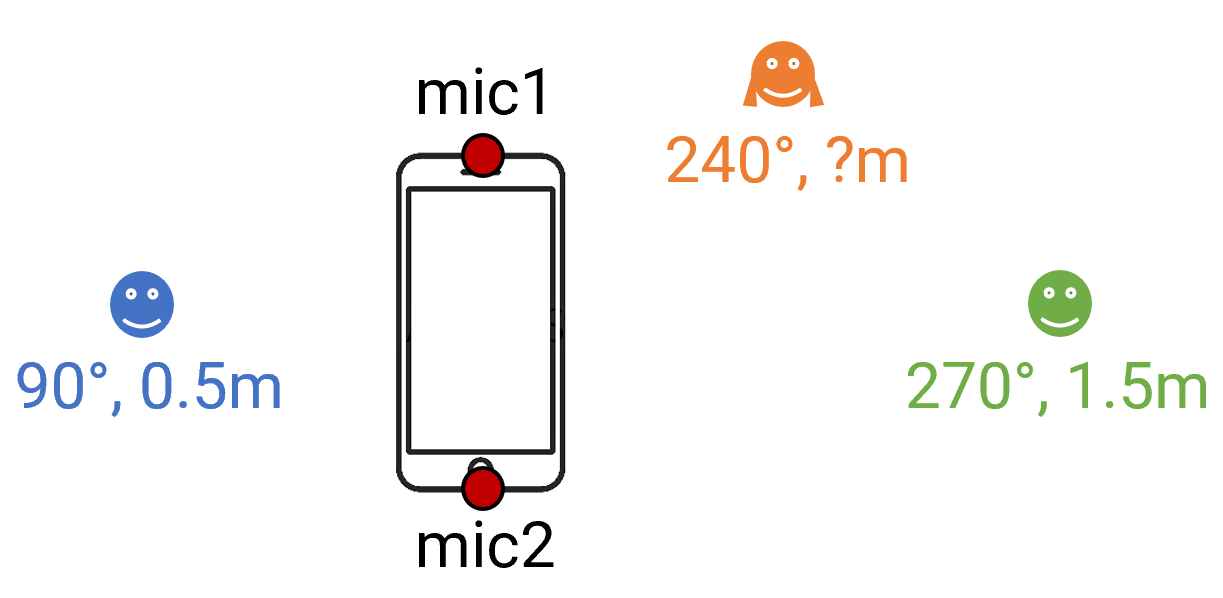

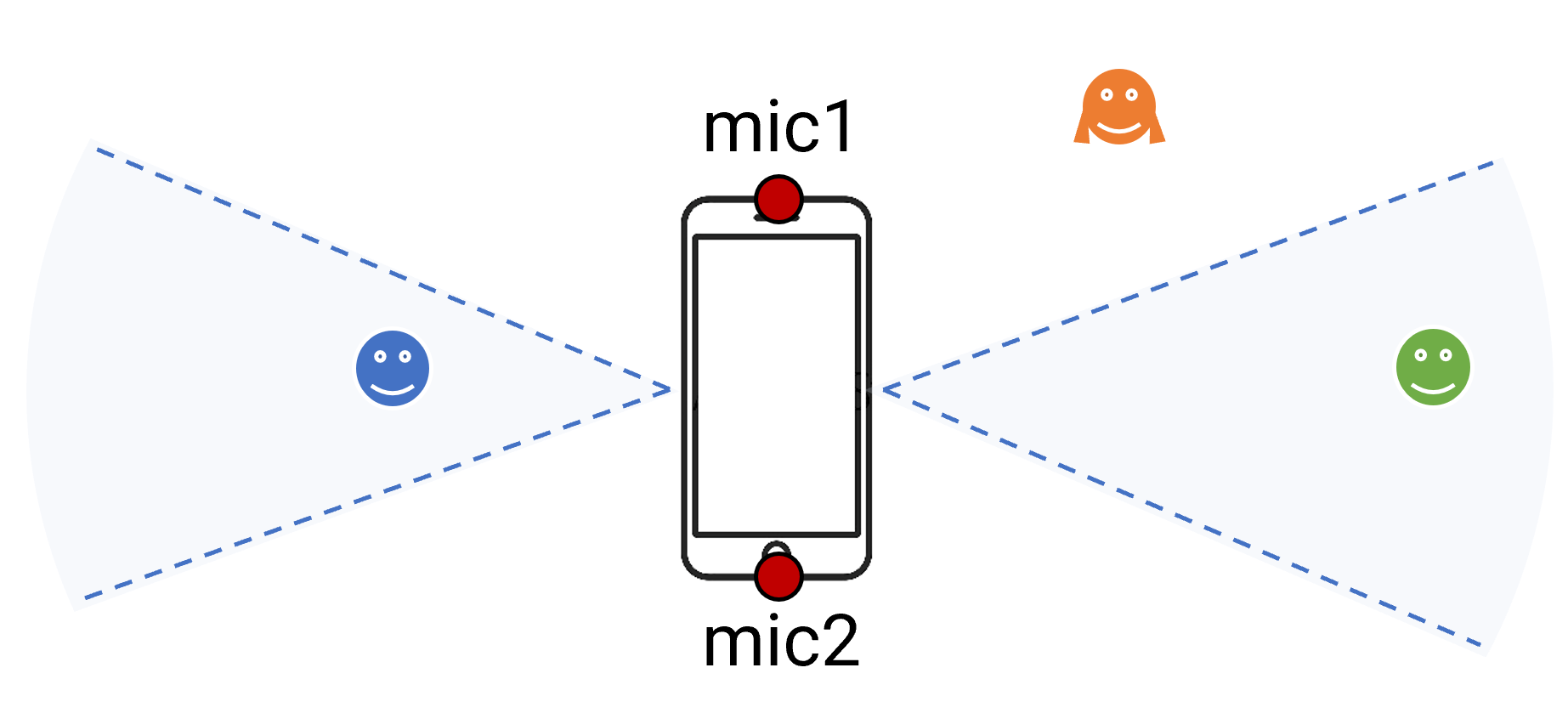

R-SE task aims at extracting all active target sounds (e.g., human speech) within a specific, user-defined spatial region,

which is different from conventional and existing tasks where a blind separation or a fixed, predefined spatial region are typically assumed.

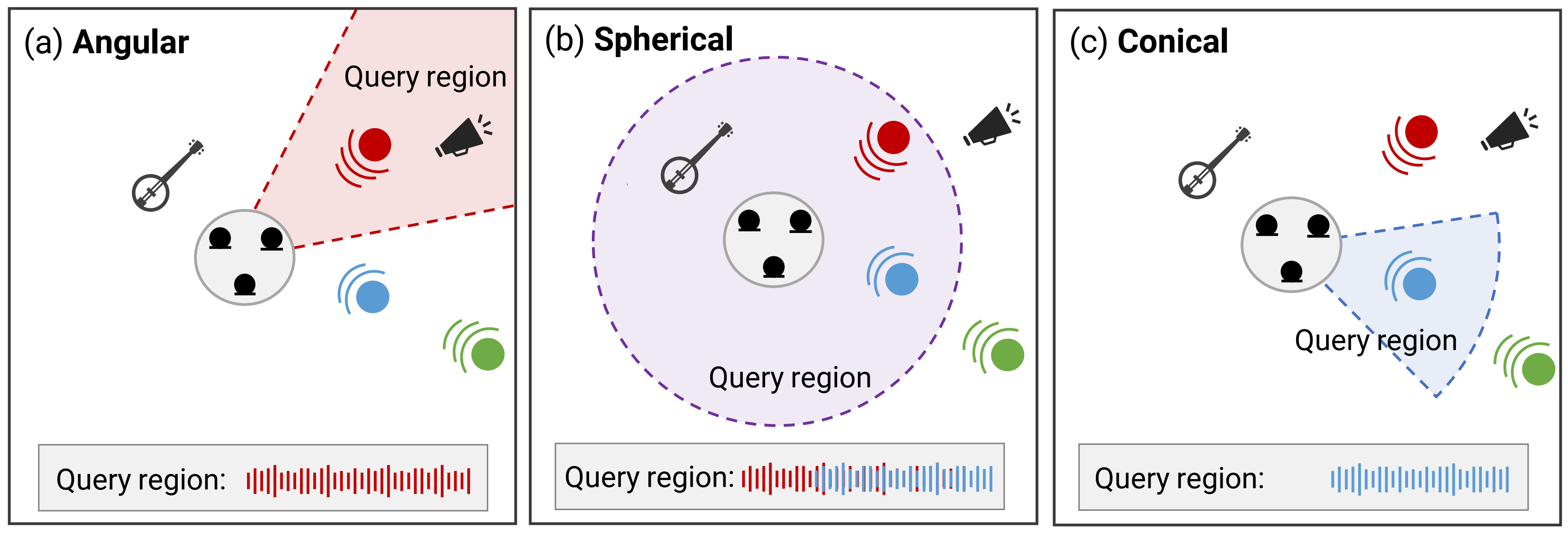

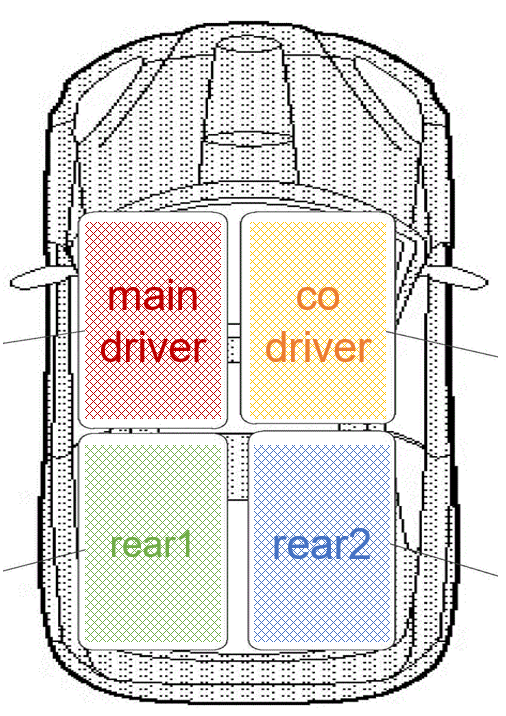

The spatial region can be defined as an angular window, a sphere, a cone, or other geometric patterns.
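The abstract names the region types but does not give their parameterization. The following is a minimal sketch of how each one could be expressed as a membership test on a 3-D source position, for example to decide which sources count as targets. The parameterizations are assumptions, not the paper's definitions: an azimuth window is given by a center and half-width around an array placed at the origin, a sphere by a center and radius, and a cone by its apex, axis, and half-angle. The class names `AngularWindow`, `Sphere`, and `Cone` are hypothetical.

```python
import math
from dataclasses import dataclass

# Hypothetical region parameterizations for illustration only.
# Positions are (x, y, z) in meters; the microphone array center is assumed at the origin.
Point = tuple[float, float, float]


@dataclass
class AngularWindow:
    """Azimuth sector in the horizontal plane, seen from the array center."""
    center_deg: float
    half_width_deg: float

    def contains(self, p: Point) -> bool:
        azimuth = math.degrees(math.atan2(p[1], p[0]))
        # Wrap the difference into [-180, 180) so windows crossing 0/360 degrees work.
        diff = (azimuth - self.center_deg + 180.0) % 360.0 - 180.0
        return abs(diff) <= self.half_width_deg


@dataclass
class Sphere:
    """Ball of a given radius around a center point."""
    center: Point
    radius: float

    def contains(self, p: Point) -> bool:
        return math.dist(p, self.center) <= self.radius


@dataclass
class Cone:
    """Infinite cone defined by an apex, an axis direction, and a half-angle."""
    apex: Point
    axis: Point
    half_angle_deg: float

    def contains(self, p: Point) -> bool:
        v = [pi - ai for pi, ai in zip(p, self.apex)]
        v_norm = math.hypot(*v)
        if v_norm == 0.0:
            return True  # treat the apex itself as inside the cone
        a_norm = math.hypot(*self.axis)
        cos_angle = sum(vi * ai for vi, ai in zip(v, self.axis)) / (v_norm * a_norm)
        # Inside if the angle to the axis is at most the half-angle (compared via cosines).
        return cos_angle >= math.cos(math.radians(self.half_angle_deg))


window = AngularWindow(center_deg=0.0, half_width_deg=30.0)
print(window.contains((1.0, -0.1, 0.0)))  # True: azimuth is about -5.7 degrees
print(window.contains((0.0, 1.0, 0.0)))   # False: azimuth is 90 degrees
```

Comparing cosines rather than angles avoids an `acos` call, and any other geometric pattern would only need its own `contains` test under the same interface.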

As a solution to the R-SE task, the proposed ReZero framework includes:

(1) definitions of different types of spatial regions,
(2) methods for region feature extraction and aggregation, and
(3) a multi-channel extension of the band-split RNN (BSRNN) model tailored to the R-SE task.

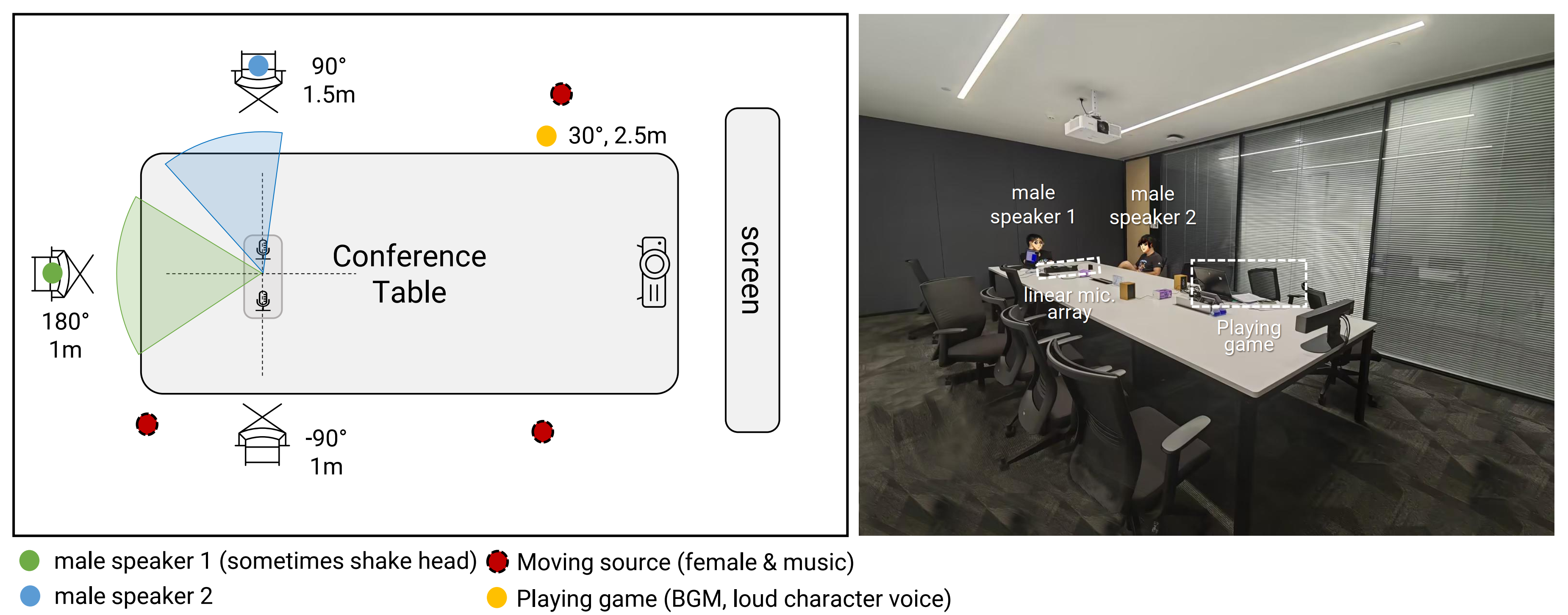

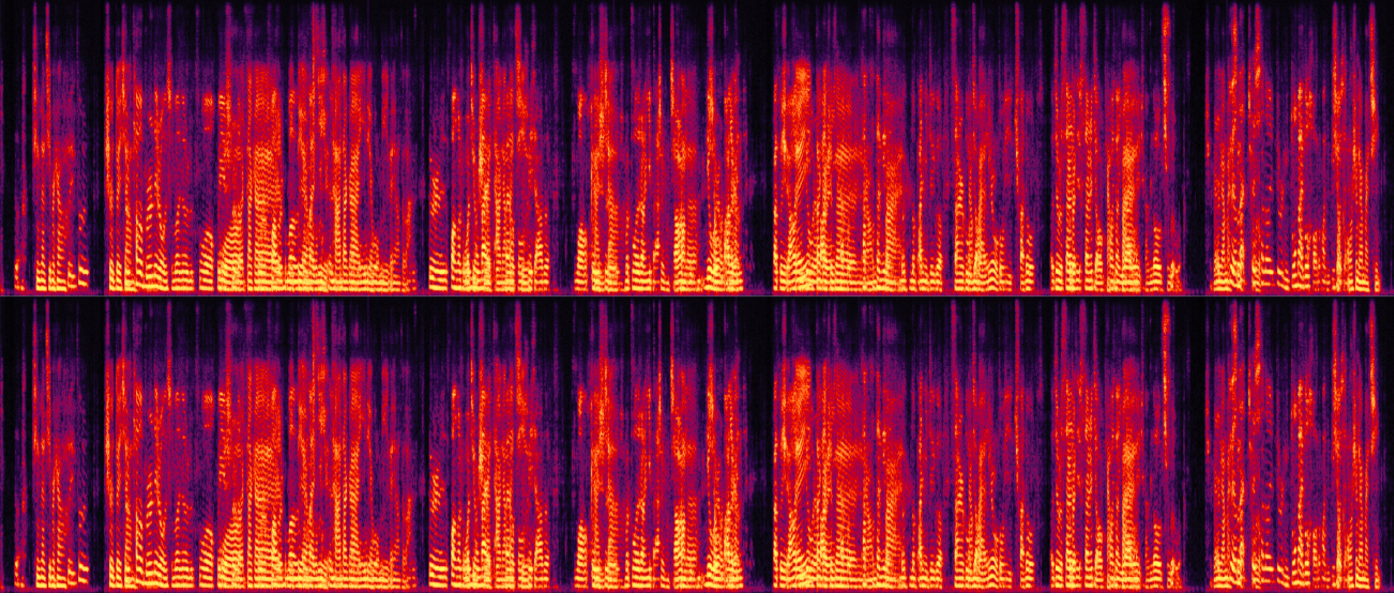

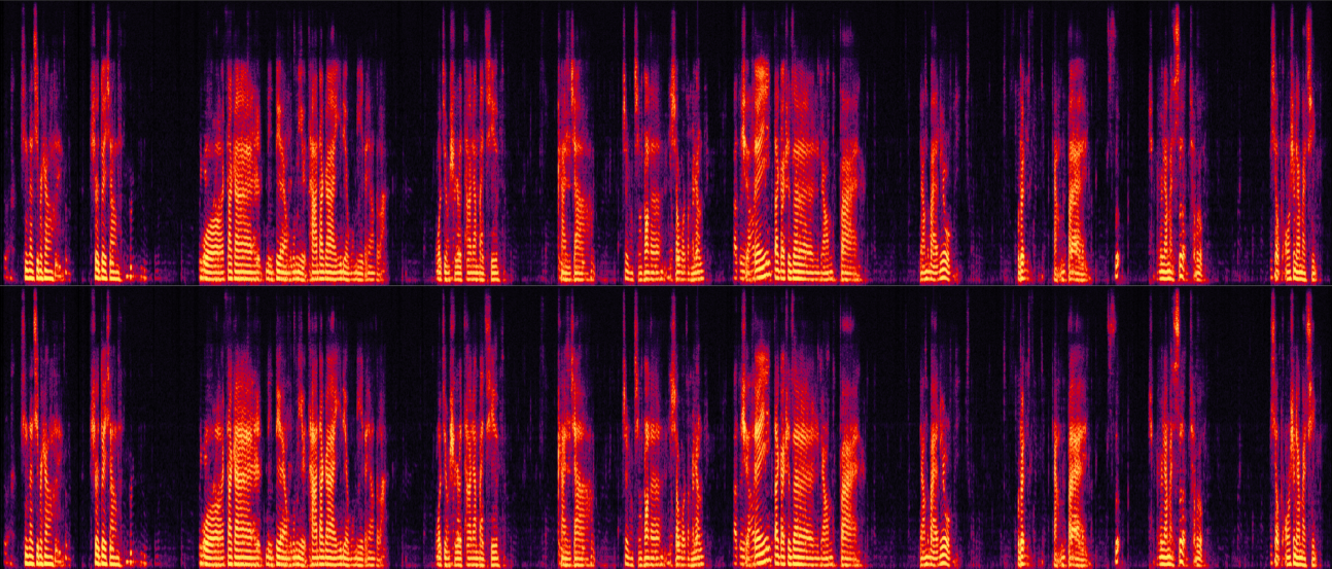

We design experiments for different microphone array geometries and different types of spatial regions, and conduct comprehensive ablation studies on different system configurations. Experimental results on both simulated and real-recorded data demonstrate the effectiveness of ReZero.